You're thinking about starting your own business. Or maybe you've already started it, built a proof of concept or an MVP, and are now wondering how to move forward with your cloud infrastructure. This article explains how cloud infrastructure can work for you at various stages of your business.

It all starts with a couple of questions.

First, let's look at a few questions that you might want to ask yourself before you begin your journey:

- Which cloud provider should we choose? Do we have internal expertise in any of the major ones (AWS, Azure, GCP)?

- In which regions should our services run, and how many environments do we plan for the initial stage (e.g. development, staging, production)?

- How stable and predictable will our platform's load be? Will there be any spikes?

- How important is it for us to manage and maintain our own infrastructure (databases, message brokers, etc.) rather than use cloud-managed solutions?

- What is our plan for building & deploying applications, both in terms of tools and strategy?

- During the initial phase, what level of downtime can we afford?

- Are we okay with basic security that can be improved later, or do we need advanced security features from the beginning?

- Are we okay with basic monitoring and a basic infrastructure setup, or do we prefer something more advanced?

Relax, you've got this.

Before you get overwhelmed by all the options, here's a very useful rule of thumb: start small and modular. Infrastructure components can easily be added or removed as you go.

Building your first solid infrastructure

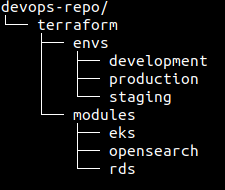

Starting with AWS is a reasonable and safe choice (though you should never use the root account or its credentials for day-to-day operations!). Next, have experts create an initial Terraform setup (Terraform is an Infrastructure as Code tool) in which a module is defined for each service you will run (e.g. an EKS Kubernetes cluster, an RDS database, an OpenSearch domain) and invoked from per-environment files or folders, so that reusable modules are kept separate from the environment-specific configuration that uses them.

With a structure like this, you can easily control which resources are deployed in which environment simply by adding small .tf files to the appropriate environment folder, such as development, staging, or production.
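One common way to arrange such a setup is a `modules/` folder with one module per service and an `environments/` folder with one subfolder per environment. The Python sketch below scaffolds that layout so its shape is easy to see. The folder names, the `rds.tf` file, and the module inputs `identifier` and `instance_class` are assumptions for illustration, and the generated `.tf` files are placeholders rather than a working Terraform configuration.

```python
import tempfile
from pathlib import Path

# Hypothetical service modules; each would hold the real Terraform resources.
MODULES = ["eks", "rds", "opensearch"]
# One folder per environment.
ENVIRONMENTS = ["development", "staging", "production"]

# A small per-environment .tf file that only *calls* the shared RDS module.
# The module inputs (identifier, instance_class) are assumed variable names.
RDS_CALL = """module "rds" {{
  source         = "../../modules/rds"
  identifier     = "my-db-postgres-{env}"
  instance_class = "{instance_class}"
}}
"""


def scaffold(root: Path, instance_classes: dict[str, str]) -> list[Path]:
    """Create a modules/ + environments/ skeleton under root; return the files written."""
    written = []
    for module in MODULES:
        module_dir = root / "modules" / module
        module_dir.mkdir(parents=True, exist_ok=True)
        main_tf = module_dir / "main.tf"
        # Placeholder: the module's resources and variables would live here.
        main_tf.write_text(f"# resources for the {module} module\n")
        written.append(main_tf)
    for env in ENVIRONMENTS:
        env_dir = root / "environments" / env
        env_dir.mkdir(parents=True, exist_ok=True)
        rds_tf = env_dir / "rds.tf"
        rds_tf.write_text(RDS_CALL.format(env=env, instance_class=instance_classes[env]))
        written.append(rds_tf)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        files = scaffold(Path(tmp), {
            "development": "db.t3.micro",
            "staging": "db.t3.medium",
            "production": "db.m5.large",
        })
        for path in files:
            print(path.relative_to(tmp))
```

Running it prints paths such as `environments/production/rds.tf`; deploying an extra service to one environment then means adding one more small file like this to that environment's folder.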

Because the environment files contain only configuration, while the deployment logic lives in the modules, the modules can be reused and their implementation details hidden from end users, who can focus on the end result rather than on how Terraform works. For instance, you could decide that “my RDS database running Postgres will be named ‘my-db-postgres’ and it will run on a ‘db.m5.large’ machine” and provide that info via a simple config file that doesn’t overwhelm you with Terraform specifics.
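Such a config file could be a `terraform.tfvars.json` in the environment folder; Terraform loads a file with exactly that name automatically. The Python sketch below writes one and rejects identifiers that break the RDS naming rules before Terraform ever runs. The variable names `db_identifier`, `db_engine`, and `db_instance_class` are hypothetical and would have to match the variables your RDS module declares.

```python
import json
import re
import tempfile
from pathlib import Path

# RDS DB instance identifier rules: 1-63 letters, digits or hyphens, starting
# with a letter, no trailing hyphen and no two consecutive hyphens.
_IDENTIFIER = re.compile(r"[a-zA-Z](?!.*--)[a-zA-Z0-9-]{0,62}(?<!-)")


def is_valid_identifier(identifier: str) -> bool:
    return _IDENTIFIER.fullmatch(identifier) is not None


def write_tfvars(env_dir: Path, identifier: str, instance_class: str) -> Path:
    """Write one environment's settings as terraform.tfvars.json.

    The keys are hypothetical; they must match variables declared in the RDS module.
    """
    if not is_valid_identifier(identifier):
        raise ValueError(f"invalid RDS identifier: {identifier!r}")
    settings = {
        "db_identifier": identifier,
        "db_engine": "postgres",
        "db_instance_class": instance_class,
    }
    path = env_dir / "terraform.tfvars.json"
    path.write_text(json.dumps(settings, indent=2) + "\n")
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_tfvars(Path(tmp), "my-db-postgres", "db.m5.large").read_text())
```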

What about location?

Location or region should be chosen based on proximity to your current and potential user base. Just keep in mind that different regions have different pricing and not all regions offer all AWS services.
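The trade-off can be made explicit with a small selection function. This Python sketch drops every region that lacks a required service, then picks the lowest latency to your users and breaks ties on price. The region names and all numbers are made up; in practice you would gather latency from your own measurements and prices from AWS's pricing pages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionInfo:
    name: str
    latency_ms: float         # measured from your main user base (your own data)
    relative_price: float     # price relative to a baseline region (your own data)
    services: frozenset[str]  # AWS services confirmed available in this region


def pick_region(regions: list[RegionInfo], required: set[str]) -> RegionInfo:
    """Closest region that offers every required service; the cheaper one wins ties."""
    candidates = [r for r in regions if required <= r.services]
    if not candidates:
        raise ValueError("no region offers all required services")
    return min(candidates, key=lambda r: (r.latency_ms, r.relative_price))


if __name__ == "__main__":
    # Made-up regions and numbers, purely for illustration.
    regions = [
        RegionInfo("region-a", 25.0, 1.05, frozenset({"eks", "rds", "opensearch"})),
        RegionInfo("region-b", 35.0, 1.00, frozenset({"eks", "rds", "opensearch"})),
        RegionInfo("region-c", 20.0, 1.00, frozenset({"eks", "rds"})),
    ]
    print(pick_region(regions, {"eks", "rds", "opensearch"}).name)
```

With this made-up data the script prints `region-a`: `region-c` is closer but lacks one of the required services.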

Do you need Kubernetes?

You may have already decided where and how your services will run during the first step. If not, now is the time to decide. We recommend running most of your services in Kubernetes clusters; on AWS, that means the EKS service, which you can set up via Terraform.

Running K8s and the services in it requires substantial knowledge and skill, especially during the initial setup phase. But once it's set up, it makes life easier for developers and for the teams who support your stack. It offers a wide range of options for scaling, self-healing, security, and much more.

Adding functionalities to the K8s cluster

As soon as you have your base K8s cluster running, you should add a few components that will provide additional functionality, such as:

- kube2iam, which provides IAM credentials to containers running in a Kubernetes cluster

- External-DNS, which synchronizes exposed Kubernetes Services and Ingresses with your DNS provider

- An ingress controller, such as Nginx, Traefik, or Kong. You can read more about ingress controllers in our separate post on the topic.

- Cert-manager, which adds certificates and certificate issuers as resource types and automates certificate issuing and renewal

- Cluster-autoscaler, which adds nodes when pods cannot be scheduled for lack of capacity and removes nodes that stay underutilized

- ECR-credentials, which automates authentication to ECR

- Velero for backup and restoration of K8s resources and persistent volumes

- Vault for secrets management and encryption
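To see how a few of these add-ons cooperate, here is a Python sketch that builds an Ingress manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The ingress class `nginx` and the cert-manager `ClusterIssuer` named `letsencrypt-prod` are assumptions and must exist in your cluster. The `cert-manager.io/cluster-issuer` annotation asks cert-manager to issue a certificate into the TLS secret named in the spec, and External-DNS (with its Ingress source enabled) creates a DNS record for the rule's host.

```python
import json


def ingress_manifest(app: str, namespace: str, host: str, service_port: int,
                     cluster_issuer: str = "letsencrypt-prod",
                     ingress_class: str = "nginx") -> dict:
    """Build a networking.k8s.io/v1 Ingress for one app (defaults are hypothetical)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": app,
            "namespace": namespace,
            "annotations": {
                # cert-manager watches this annotation and issues a certificate
                # into the secret named under spec.tls.
                "cert-manager.io/cluster-issuer": cluster_issuer,
            },
        },
        "spec": {
            # Selects which ingress controller (Nginx, Traefik, Kong, ...) serves it.
            "ingressClassName": ingress_class,
            "tls": [{"hosts": [host], "secretName": f"{app}-tls"}],
            # External-DNS creates a DNS record for this host.
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": app, "port": {"number": service_port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # App name and host are made up; pipe the output into `kubectl apply -f -`.
    print(json.dumps(ingress_manifest("my-app", "default", "app.example.com", 8080), indent=2))
```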

It's now time to deploy your apps. If your code is hosted on GitLab, you can use GitLab's built-in CI/CD system and write pipelines in YAML (in a file named .gitlab-ci.yml).

Pipelines should follow the same keep-it-simple principle, meaning as few steps as possible. A pipeline should build the app, deploy it to development on every push or on every merge into the main branch, and deploy it to production either manually or when a specific release is tagged.
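These rules boil down to a small decision table. The Python sketch below models it using the names of GitLab's predefined CI/CD variables (`CI_COMMIT_BRANCH`, `CI_COMMIT_TAG`, `CI_DEFAULT_BRANCH`), with a flag to choose between the two development-deploy options mentioned above. The job names and the `v1.2.3` release-tag convention are assumptions.

```python
import re

# Hypothetical release-tag convention, e.g. v1.2.3.
RELEASE_TAG = re.compile(r"v\d+\.\d+\.\d+")


def planned_jobs(ci_vars: dict[str, str], dev_on_every_push: bool = False) -> dict[str, str]:
    """Return job name -> "auto" or "manual" for one pipeline.

    ci_vars holds GitLab's predefined variables; CI_COMMIT_BRANCH is set in
    branch pipelines and CI_COMMIT_TAG only in tag pipelines.
    """
    jobs = {"build": "auto"}  # every pipeline builds
    branch = ci_vars.get("CI_COMMIT_BRANCH")
    tag = ci_vars.get("CI_COMMIT_TAG")
    default_branch = ci_vars.get("CI_DEFAULT_BRANCH", "main")

    if tag:
        if RELEASE_TAG.fullmatch(tag):
            # Tagging a release deploys it to production.
            jobs["deploy-production"] = "auto"
    elif branch:
        on_main = branch == default_branch
        if on_main or dev_on_every_push:
            jobs["deploy-development"] = "auto"
        if on_main:
            # Production from main is available but must be started by hand.
            jobs["deploy-production"] = "manual"
    return jobs
```

In `.gitlab-ci.yml`, each branch of this logic would become a `rules:` entry on the corresponding job, for example `if: $CI_COMMIT_TAG` for the tag case and `when: manual` for the manual production deploy.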

As there are several potential security concerns in integrating your CI/CD pipelines with the K8s cluster, we recommend handing this part over to experts.

The most common ways of deploying into K8s are native K8s manifest files and Helm, a package manager with which your apps are packaged as Helm charts. Helm is our preferred option. To package an app with a generic Helm chart (and deploy it to the K8s environment), all you need is a small values file that controls how the generic chart is templated.
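A small values file is enough because Helm combines the chart's default values with the ones you supply: nested maps are merged key by key, while scalars and lists from your file replace the defaults. The Python sketch below reproduces that coalescing rule for plain dictionaries; it ignores Helm extras such as setting a key to `null` to delete a default, and the chart defaults and app values shown are hypothetical.

```python
import copy
from typing import Any


def coalesce(defaults: dict[str, Any], overrides: dict[str, Any]) -> dict[str, Any]:
    """Merge overrides onto defaults: maps merge recursively, everything else replaces."""
    result = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = coalesce(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical defaults of a generic chart shared by all services.
CHART_DEFAULTS = {
    "replicaCount": 1,
    "image": {"repository": "", "tag": "latest", "pullPolicy": "IfNotPresent"},
    "service": {"type": "ClusterIP", "port": 80},
    "env": [],
}

# Hypothetical values file for one app: only what differs from the defaults.
MY_APP_VALUES = {
    "replicaCount": 2,
    "image": {"repository": "my-registry/my-app", "tag": "1.4.0"},
    "env": [{"name": "LOG_LEVEL", "value": "info"}],
}

if __name__ == "__main__":
    print(coalesce(CHART_DEFAULTS, MY_APP_VALUES))
```

With real Helm, the equivalent would be something like `helm upgrade --install my-app ./generic-chart -f my-app-values.yaml`, where the chart path and file name are placeholders.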

Once this is in place and your CI/CD pipelines are deploying services, make sure your Service and Ingress resources follow the principle of least privilege, exposing each application only as widely as it actually needs to be.

We won't list all the resources and features needed to set up applications, but a few are worth mentioning: readiness, liveness, and startup probes; Horizontal Pod Autoscaling (HPA); and Pod Disruption Budgets (PDB). These features improve the availability and scalability of services and help make deployments safer in general.
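As one example of what these features do, the Kubernetes documentation gives the HPA's core scaling rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and the controller skips scaling when that ratio is within a tolerance (0.1 by default). The Python sketch below implements only that rule plus the `minReplicas`/`maxReplicas` clamp; stabilization windows and the handling of not-yet-ready pods are left out, and the default bounds are arbitrary.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA rule: scale in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas bounds (defaults here are arbitrary).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

Here `ceil(4 * 90 / 60)` gives 6, so the script prints `6`.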

You don’t know if you can’t see.

With applications up and running, it's time to start collecting metrics and logs to gain insight into how they behave. Prometheus, Grafana, and Alertmanager are our preferred monitoring tools, and the ELK stack is our preferred logging tool. If you have the budget and like a polished user interface, we highly recommend Datadog for both monitoring and logging.

Keeping backups of your databases and Kubernetes clusters is essential in case of hardware failure or disaster. We recommend Velero for Kubernetes backups. It lets you choose which resources to back up, when to back them up, and where to store the backups. You can back up persistent volumes and restore whole Kubernetes clusters with just a few commands. Besides disaster recovery, Velero can also be used to migrate resources between clusters.
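For example, a nightly Velero schedule limited to certain namespaces can be created with the `velero schedule create` command. The Python sketch below only assembles the argument list, which you could execute with `subprocess.run(cmd, check=True)` once the Velero CLI is installed and configured. The schedule name, namespace, and 30-day retention (`720h0m0s`) are assumptions.

```python
import shlex
from typing import Optional


def velero_schedule_cmd(name: str, cron: str, namespaces: list[str],
                        ttl: str = "720h0m0s",
                        storage_location: Optional[str] = None) -> list[str]:
    """Build a `velero schedule create` command: what to back up, when, and where."""
    cmd = [
        "velero", "schedule", "create", name,
        f"--schedule={cron}",                            # when: a cron expression
        f"--include-namespaces={','.join(namespaces)}",  # what: only these namespaces
        f"--ttl={ttl}",                                  # how long each backup is kept
    ]
    if storage_location:
        # where: a backup storage location already configured in Velero
        cmd.append(f"--storage-location={storage_location}")
    return cmd


if __name__ == "__main__":
    cmd = velero_schedule_cmd("nightly-production", "0 1 * * *", ["production"])
    # Print a copy-pasteable shell command.
    print(shlex.join(cmd))
```

Restoring is the reverse operation, e.g. `velero restore create --from-backup <backup-name>`.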

Depending on the type of database you use, multiple tools are available for database backups. Cloud platforms typically provide out-of-the-box backups for their managed databases, but you can also use solutions like AWS Backup, which lets you configure backups centrally for different kinds of cloud services (EC2, DynamoDB, EFS, DocumentDB, or Neptune).
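As a sketch of the AWS Backup route, the Python function below builds the `BackupPlan` argument for the `create_backup_plan` call of boto3's `backup` client and sends it only when explicitly asked. The plan name, vault name, run hour, and 35-day retention are hypothetical; the vault must already exist, and resources are attached to the plan in a separate `create_backup_selection` call.

```python
from typing import Any


def daily_backup_plan(plan_name: str, vault_name: str,
                      hour_utc: int = 3, retention_days: int = 35) -> dict[str, Any]:
    """Build the BackupPlan argument for boto3's backup.create_backup_plan."""
    if not 0 <= hour_utc <= 23:
        raise ValueError("hour_utc must be between 0 and 23")
    return {
        "BackupPlanName": plan_name,
        "Rules": [{
            "RuleName": f"{plan_name}-daily",
            "TargetBackupVaultName": vault_name,
            # AWS cron fields: minutes hours day-of-month month day-of-week year
            "ScheduleExpression": f"cron(0 {hour_utc} * * ? *)",
            "Lifecycle": {"DeleteAfterDays": retention_days},
        }],
    }


def create_plan(plan: dict[str, Any]) -> str:
    """Send the plan to AWS (requires boto3 and credentials) and return its ID."""
    import boto3  # imported lazily so building a plan works without boto3 installed

    client = boto3.client("backup")
    response = client.create_backup_plan(BackupPlan=plan)
    return response["BackupPlanId"]


if __name__ == "__main__":
    print(daily_backup_plan("prod-databases", "prod-vault"))
```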

This is it.

Congrats, you now have a reasonably solid foundation for future expansion and are ready for continuous support.

This is a fairly simple representation of the cloud infrastructure stack and its components (many topics are not covered for simplicity's sake). Our team at Devolut has built dozens of infrastructures from scratch. If you'd like to learn more about how to build and maintain a scalable system at your company, just say hi 👋 at hello@devolut.io and we'll gladly guide you along the way.